AWS Lambda Trigger on File Upload From S3 With Node.js and Store in DynamoDB


Serverless (AWS) - How to trigger a Lambda function from an S3 bucket, and use it to update a DDB.

What is a Lambda on AWS?

If you are a programmer or have some programming background, you may be familiar with Lambda functions.

They are a useful feature in many of the most popular modern languages, and they are basically functions that lack an identifier, which is why they are also called anonymous functions. This last name may not be appropriate for certain languages, where not all anonymous functions are necessarily Lambda functions, but that's a discussion for another day.

AWS Lambda takes inspiration from this concept, but it's fundamentally different. For starters, AWS Lambda is a service that lets you run code without having to provision servers, or even EC2 instances. As such, it is one of the cornerstones of Amazon's serverless services, alongside API Gateway, DynamoDB and S3, to name a few.

AWS Lambda functions follow an event-driven architecture, and as such they can be triggered and executed by a wide variety of events. In this article, we will create a Lambda function and configure it to trigger whenever an object is put inside an S3 bucket. Then we'll use it to update a DynamoDB table.

Getting Started

We'll start in the AWS console. For this little project, we'll need an S3 bucket, so let's go ahead and create it.

In case you haven't heard of it, S3 stands for Simple Storage Service, and it allows us to store data as objects inside structures called "buckets".

So we'll search for S3 in the AWS console and create a bucket.

Today we don't need anything fancy, so let's just give it a unique name (bucket names are shared across all AWS accounts, so it has to be globally unique).
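Because the name must be globally unique and follow S3's naming rules, it can help to check a candidate before typing it into the console. The sketch below checks only a subset of those rules (3 to 63 characters; lowercase letters, digits, dots and hyphens; a letter or digit at both ends; no adjacent dots; not shaped like an IP address) and builds a candidate from a prefix plus a random hex suffix. The helper names `isPlausibleBucketName` and `makeBucketName` are hypothetical, and passing the check does not guarantee the name is still available.

```javascript
const crypto = require("crypto");

// Checks a subset of the S3 bucket naming rules (not exhaustive).
function isPlausibleBucketName(name) {
  // 3 to 63 characters long.
  if (name.length < 3 || name.length > 63) return false;
  // Lowercase letters, digits, dots and hyphens only; letter or digit at both ends.
  if (!/^[a-z0-9][a-z0-9.-]*[a-z0-9]$/.test(name)) return false;
  // No two adjacent periods.
  if (name.includes("..")) return false;
  // Must not be formatted like an IP address, e.g. 192.168.5.4.
  if (/^\d{1,3}(\.\d{1,3}){3}$/.test(name)) return false;
  return true;
}

// Appends 8 random lowercase hex characters to make a collision unlikely.
function makeBucketName(prefix) {
  const suffix = crypto.randomBytes(4).toString("hex");
  return `${prefix}-${suffix}`;
}

const candidate = makeBucketName("lambda-upload-demo");
console.log(candidate, isPlausibleBucketName(candidate));
```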

We will also need a DynamoDB table. DynamoDB is Amazon's serverless NoSQL database solution, and we will use it to keep track of the files uploaded to our bucket.

We'll go into "Create table", then give it a name and a partition key. The code later in this article assumes a table named `files` with the partition key `file_name`.

We could also give it a sort key, like the bucket where the file is stored. That way, when we query a given file name, the results would come back ordered by bucket. However, that is not our focus today, and since we are using only one bucket, we'll leave it unchecked.
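If you prefer code to console clicks, the same table can be described with DynamoDB's CreateTable parameters. The sketch assumes the table name `files` and partition key `file_name`, both taken from the Lambda code later in this article; on-demand billing (`PAY_PER_REQUEST`) is an assumption chosen for simplicity. The optional sort-key variant shows what the "bucket as sort key" idea would look like. Either object could be passed to `createTable` in the AWS SDK for JavaScript v2 or to `CreateTableCommand` in v3.

```javascript
// Table "files" with a string partition (HASH) key "file_name".
const filesTableParams = {
  TableName: "files",
  AttributeDefinitions: [{ AttributeName: "file_name", AttributeType: "S" }],
  KeySchema: [{ AttributeName: "file_name", KeyType: "HASH" }],
  // Assumption: on-demand capacity, so no read/write units need to be chosen.
  BillingMode: "PAY_PER_REQUEST",
};

// Optional variant (skipped in this tutorial): add the bucket name as a sort (RANGE) key.
const filesTableWithSortKey = {
  ...filesTableParams,
  AttributeDefinitions: [
    ...filesTableParams.AttributeDefinitions,
    { AttributeName: "bucket_name", AttributeType: "S" },
  ],
  KeySchema: [
    ...filesTableParams.KeySchema,
    { AttributeName: "bucket_name", KeyType: "RANGE" },
  ],
};

console.log(JSON.stringify(filesTableWithSortKey.KeySchema));
```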

Next, we'll search for Lambda on the console.

Here we can choose to make a Lambda from scratch, or build it using one of the many great templates provided by Amazon. We can also source it from a container image or a repository. This time we'll start from scratch and use the latest version of Node.js.

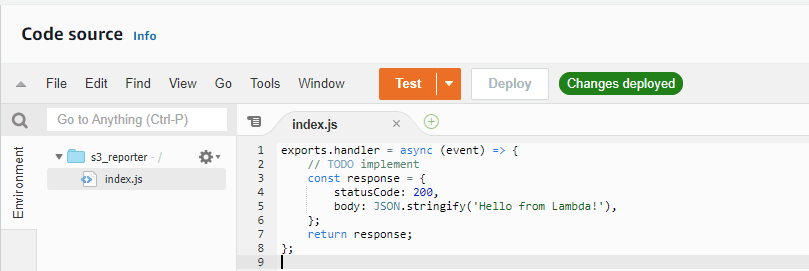

We will be greeted by a function similar to this one.

It's just a simple function that returns an "OK" whenever it's triggered. Those of you familiar with Node may be wondering about that "handler" on the first line.

Anatomy of a Lambda function.

The handler is the entry point for your Lambda: when the function is invoked, the handler method is executed. The handler function also receives two parameters: event and context.

The event is a JSON object that provides event-related information, which your Lambda can use to perform tasks. The context, on the other hand, contains methods and properties that let you get more information about your function and its invocation.

If at any point you want to test what your Lambda function is doing, you can press the "Test" button and try its functionality with parameters of your choosing.

We know we want our Lambda to be triggered each time somebody uploads an object to a specific S3 bucket. We could configure the bucket, then upload a file to see what kind of data our function receives. Fortunately, AWS already has a test event that mimics an S3 PUT trigger.

We just need to open the dropdown on the "Test" button and select the "Amazon S3 Put" template.
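The template produces an event shaped roughly like the abridged one below; the bucket name and key are placeholders, and the real template carries more fields (event time, user identity, ETag and so on). One detail worth knowing: S3 URL-encodes object keys in these notifications (a space arrives as `+`), so the hypothetical `describeUpload` helper decodes the key before using it.

```javascript
// Abridged S3 "ObjectCreated:Put" notification with placeholder values.
const sampleEvent = {
  Records: [
    {
      eventSource: "aws:s3",
      eventName: "ObjectCreated:Put",
      s3: {
        bucket: { name: "example-bucket", arn: "arn:aws:s3:::example-bucket" },
        object: { key: "uploads/my+photo.jpg", size: 1024 },
      },
    },
  ],
};

// Extracts bucket and object info from one record.
// Keys arrive URL-encoded, so "+" is turned back into a space before decoding.
function describeUpload(record) {
  const { bucket, object } = record.s3;
  return {
    bucketName: bucket.name,
    bucketArn: bucket.arn,
    key: decodeURIComponent(object.key.replace(/\+/g, " ")),
  };
}

console.log(describeUpload(sampleEvent.Records[0]));
```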

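```javascript
// AWS SDK for JavaScript v2 (preinstalled in the Node.js 16 and earlier Lambda runtimes).
const AWS = require("aws-sdk");

// Define our target DB once, outside the handler, so it is reused across invocations.
const docClient = new AWS.DynamoDB.DocumentClient({ region: "us-east-1" });

exports.handler = async (event) => {
  // Get event info: the S3 record describes the bucket and the uploaded object.
  const s3Info = event.Records[0].s3;
  const obj = s3Info.object;

  const params = {
    TableName: "files",
    Item: {
      file_name: obj.key,
      bucket_name: s3Info.bucket.name,
      bucket_arn: s3Info.bucket.arn,
    },
  };

  // Send the request and return the response.
  try {
    const result = await docClient.put(params).promise();
    // put() resolves to a (usually empty) object without a statusCode, so report 200 ourselves.
    return { statusCode: 200, body: JSON.stringify(result) };
  } catch (error) {
    // SDK v2 errors carry statusCode and code; fall back to 500 if statusCode is missing.
    return {
      statusCode: error.statusCode || 500,
      body: JSON.stringify({ code: error.code, message: error.message }),
    };
  }
};
```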

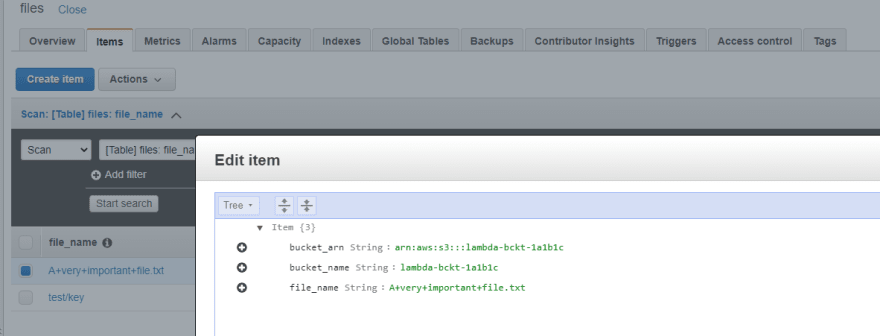

This simple code captures the event info and writes it into our DynamoDB table.
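The handler above reads only `event.Records[0]` and talks to DynamoDB directly. One way to make the same logic easier to test locally is to separate the item mapping from the write. The sketch below does that with a hypothetical `makeHandler(putItem)` factory, loops over every entry in `event.Records` as a defensive choice, and decodes the URL-encoded object key. The SDK wiring is left as comments: the `aws-sdk` v2 package is preinstalled only in the Node.js 16 and earlier runtimes, while Node.js 18 and later ship AWS SDK for JavaScript v3.

```javascript
// Builds the DynamoDB item for one S3 record, using the same attribute names as above.
function toFileItem(record) {
  const { bucket, object } = record.s3;
  return {
    file_name: decodeURIComponent(object.key.replace(/\+/g, " ")),
    bucket_name: bucket.name,
    bucket_arn: bucket.arn,
  };
}

// Hypothetical factory: putItem(params) is any async function that writes one item.
function makeHandler(putItem, tableName = "files") {
  return async (event) => {
    try {
      // Map and write every record in the notification, not just the first one.
      const items = (event.Records || []).map(toFileItem);
      for (const item of items) {
        await putItem({ TableName: tableName, Item: item });
      }
      return { statusCode: 200, body: JSON.stringify({ stored: items.length }) };
    } catch (error) {
      return { statusCode: 500, body: JSON.stringify({ message: error.message }) };
    }
  };
}

// Possible wiring with AWS SDK for JavaScript v3 inside Lambda:
// const { DynamoDBClient } = require("@aws-sdk/client-dynamodb");
// const { DynamoDBDocumentClient, PutCommand } = require("@aws-sdk/lib-dynamodb");
// const doc = DynamoDBDocumentClient.from(new DynamoDBClient({ region: "us-east-1" }));
// exports.handler = makeHandler((params) => doc.send(new PutCommand(params)));
```

Injecting `putItem` keeps the mapping logic free of AWS calls, so it can be exercised with a fake writer that just records its arguments.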

However, if you go ahead and execute it, you will probably receive an error stating that our Lambda function doesn't have the required permission to run this operation.

So we'll just head to IAM. Hopefully, we'll be security-conscious and create a role with only the minimum permissions our Lambda needs.

Just kidding: for this tutorial, giving the function's execution role full DynamoDB access (the AmazonDynamoDBFullAccess managed policy) will do!
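For the security-conscious route, a minimal policy could grant only what this function actually uses: `dynamodb:PutItem` on the `files` table, plus the CloudWatch Logs actions that the `AWSLambdaBasicExecutionRole` managed policy also grants. The account ID and region below are placeholders, and building the JSON from a JavaScript object is just a convenience for pasting it into the IAM policy editor.

```javascript
// Placeholders: replace with your own region and 12-digit account ID.
const region = "us-east-1";
const accountId = "ACCOUNT_ID";

const lambdaPolicy = {
  Version: "2012-10-17",
  Statement: [
    {
      // The handler only writes single items to the "files" table.
      Effect: "Allow",
      Action: ["dynamodb:PutItem"],
      Resource: `arn:aws:dynamodb:${region}:${accountId}:table/files`,
    },
    {
      // Lets the function write its logs to CloudWatch Logs.
      Effect: "Allow",
      Action: ["logs:CreateLogGroup", "logs:CreateLogStream", "logs:PutLogEvents"],
      Resource: "*",
    },
  ],
};

console.log(JSON.stringify(lambdaPolicy, null, 2));
```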

Now everything should work. Let's publish our Lambda, add our S3 bucket as its trigger, then upload a file to the bucket and test it out!
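For uploads to actually invoke the function, the bucket has to be registered as its trigger, for example through "Add trigger" in the Lambda console, which also grants S3 permission to invoke the function. Under the hood this is a bucket notification configuration; the sketch shows its shape as accepted by S3's `PutBucketNotificationConfiguration` API, with a placeholder function ARN. If you configure it through the API rather than the console, the function also needs a resource-based permission (Lambda's `AddPermission`) for the `s3.amazonaws.com` principal.

```javascript
// Placeholder ARN: copy the real one from the Lambda console.
const functionArn = "arn:aws:lambda:us-east-1:ACCOUNT_ID:function:FUNCTION_NAME";

// Shape of the NotificationConfiguration accepted by PutBucketNotificationConfiguration.
const notificationConfiguration = {
  LambdaFunctionConfigurations: [
    {
      LambdaFunctionArn: functionArn,
      // Fire only for PUT uploads; "s3:ObjectCreated:*" would also cover POST, COPY
      // and completed multipart uploads.
      Events: ["s3:ObjectCreated:Put"],
    },
  ],
};

console.log(JSON.stringify(notificationConfiguration, null, 2));
```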

Everything is working as it should!

Now, what we did was pretty basic, but this basic workflow (perhaps backed by API Gateway or orchestrated with Step Functions) can form the basis of a pretty complex serverless app.

Thank you for reading!


Source: https://dev.to/marianoramborger/serverless-aws-how-to-trigger-a-lambda-function-from-a-s3-bucket-and-use-it-to-update-a-ddb-2m0a
